Test Case

This document describes a very simple Highly Available (HA) setup based on a Primary/Backup configuration.

Description

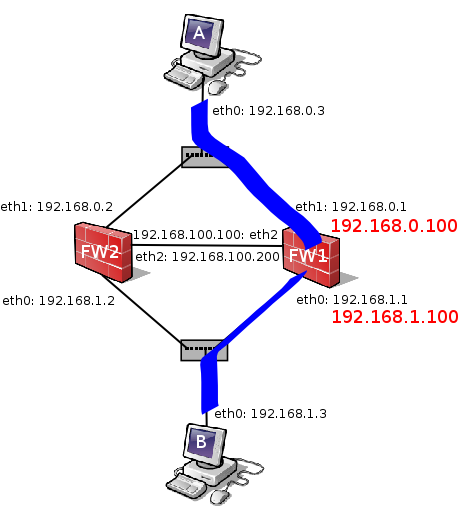

Our test case is composed of two hosts acting as firewalls: FW1 and FW2, and two hosts acting as desktops: A and B. The network schema is detailled in the following picture:

Initially, FW1 holds the virtual IPs that are highlighted in red in the picture. The setup follows the classical Primary/Backup approach: FW1 is active and FW2 waits for it to fail. The software selected to automate the takeover of the virtual IPs is keepalived.
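For reference, the keepalived side of such a setup could look roughly like the following sketch: a shell function that prints a minimal `vrrp_instance` block for either node. Everything in it is an assumption for illustration: the instance name `VI_FW`, `virtual_router_id 51`, the priorities, the advertisement interface, and the two virtual addresses (borrowed from addresses that appear elsewhere in this document; the real ones are those marked in red in the picture).

```sh
#!/bin/sh
# Print a minimal keepalived VRRP instance for FW1 (MASTER) or FW2 (BACKUP).
# Hypothetical values: instance name, router id, priorities, interfaces, VIPs.
gen_keepalived_conf() {
    case "$1" in
        MASTER) kc_prio=100 ;;   # FW1: higher priority, holds the VIPs first
        BACKUP) kc_prio=50 ;;    # FW2: takes over when FW1 stops advertising
        *) echo "usage: gen_keepalived_conf MASTER|BACKUP" >&2; return 1 ;;
    esac
    cat <<EOF
vrrp_instance VI_FW {
    state $1
    interface eth1
    virtual_router_id 51
    priority $kc_prio
    advert_int 1
    virtual_ipaddress {
        192.168.0.100/24 dev eth1
        192.168.1.100/24 dev eth0
    }
}
EOF
}
```

On FW1 one would write `gen_keepalived_conf MASTER > /etc/keepalived/keepalived.conf`, and the same with `BACKUP` on FW2. Both nodes share the router id; the higher priority decides which one is active.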

Ruleset

The filtering policy implemented in FW1 and FW2 is the following:

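```sh
# [1] drop anything that is not explicitly accepted
iptables -P FORWARD DROP
# [2] from eth0 (host B's side): only established or related traffic
iptables -A FORWARD -i eth0 -m state --state ESTABLISHED,RELATED -j ACCEPT
# [3] from eth1 (host A's side): new TCP connections starting with a SYN
iptables -A FORWARD -i eth1 -p tcp --syn -m state --state NEW -j ACCEPT
# [4] from eth1: TCP traffic belonging to established connections
iptables -A FORWARD -i eth1 -p tcp -m state --state ESTABLISHED -j ACCEPT
# [5] log INVALID packets (LOG does not terminate; the policy then drops them)
iptables -A FORWARD -m state --state INVALID -j LOG
# [6] source NAT for host A (192.168.0.3)
iptables -I POSTROUTING -t nat -s 192.168.0.3 -j SNAT --to 192.168.1.100
```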

As shown above, the default policy of the FORWARD chain is DROP [1]. The firewall cluster accepts new TCP connections started from host A to host B [3,4], and only established or related traffic in the opposite direction [2]. Moreover, the cluster performs source NAT on connections going from host A to B, rewriting host A's address 192.168.0.3 to 192.168.1.100 [6]. Packets in the INVALID state are logged [5]; since LOG is a non-terminating target, they are then dropped by the default policy.
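To make the rule ordering concrete, here is a toy model of the FORWARD chain written as a shell function. It is not iptables and the function name is hypothetical: it takes the input interface, the protocol, whether the packet is a bare SYN (`syn` or `nosyn`, standing in for `--syn`) and the conntrack state, then walks rules [2] to [5] in order before falling back to the default policy [1]. Rule [6] lives in the nat table and does not affect the verdict, so it is left out.

```sh
#!/bin/sh
# Toy model of the FORWARD chain: prints the verdict for one packet.
# $1 = input interface, $2 = protocol, $3 = syn|nosyn, $4 = conntrack state
forward_verdict() {
    # [2] traffic entering from eth0 (B's side) must belong to a known flow
    if [ "$1" = eth0 ] && { [ "$4" = ESTABLISHED ] || [ "$4" = RELATED ]; }; then
        echo ACCEPT; return
    fi
    # [3] new TCP connections from eth1 (A's side) must start with a SYN
    if [ "$1" = eth1 ] && [ "$2" = tcp ] && [ "$3" = syn ] && [ "$4" = NEW ]; then
        echo ACCEPT; return
    fi
    # [4] established TCP traffic from eth1
    if [ "$1" = eth1 ] && [ "$2" = tcp ] && [ "$4" = ESTABLISHED ]; then
        echo ACCEPT; return
    fi
    # [5] LOG is non-terminating: INVALID packets are logged, then hit [1]
    if [ "$4" = INVALID ]; then
        echo "LOG+DROP"; return
    fi
    # [1] default policy
    echo DROP
}
```

For example, `forward_verdict eth1 tcp nosyn NEW` prints `DROP`: a packet from host A's side that conntrack considers new but that carries no SYN matches neither [3] nor [4].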

Generating traffic

In order to test the setup, we start an SSH session from host A to host B.

Failure and Takeover

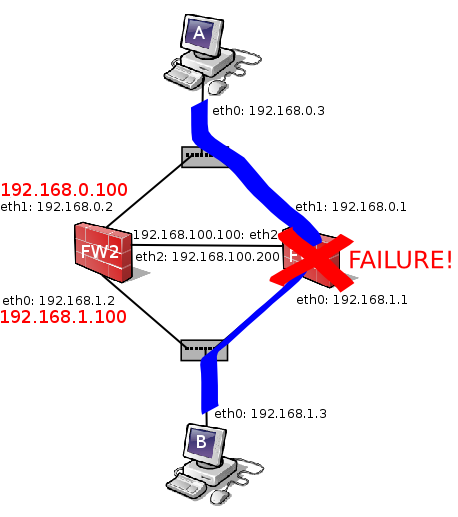

Once FW1 experiences a failure, FW2 takes over the virtual IPs.

Problems

In theory, the SSH connection should now be forwarded by FW2, but the new active host knows nothing about it and considers it a new connection. Since the connection is actually already established, its packets cannot match the rules that would let them through [2,4], so they are dropped by the default policy [1]. Those that conntrack classifies as INVALID are also logged [5].
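The root of the problem is the lookup that happens before the rules are evaluated: FW1 has an entry for the SSH connection in its connection tracking table, while FW2 does not. A toy sketch of that lookup follows, where a "table" is simply a file holding one line per connection in a hypothetical `src dst sport dport` format (A-to-B direction only); the function name is also hypothetical.

```sh
#!/bin/sh
# Toy conntrack lookup: is this A->B tuple already known to the firewall?
# Hypothetical table format: one "src dst sport dport" line per connection.
ct_state() {
    # $1 = table file, $2 = tuple of the incoming packet
    if grep -Fxq -- "$2" "$1"; then
        echo ESTABLISHED   # entry found: the connection is already tracked
    else
        echo NEW           # simplification: unknown packets are treated as NEW
    fi
}
```

With a table file containing `192.168.0.3 192.168.0.100 51356 22`, as on FW1, the SSH packet is reported as ESTABLISHED; with FW2's empty table, the same packet is reported as NEW while carrying no SYN, so neither rule [3] nor rule [4] accepts it. This is a simplification: depending on kernel settings such as `net.netfilter.nf_conntrack_tcp_loose`, the real kernel may classify such a mid-stream packet as NEW or as INVALID.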

Solution: Conntrackd

To overcome the problem, we deploy conntrackd on both FW1 and FW2. The daemon replicates the state of the connections forwarded by the active node so that the backup can take them over properly. On the active node, we can dump these connections from conntrackd's internal cache:

```
(shell FW1)# conntrackd -i
tcp 6 ESTABLISHED src=192.168.0.3 dst=192.168.0.100 sport=51356 dport=22 src=192.168.0.100 dst=192.168.1.3 sport=22 dport=51356 [ASSURED] mark=0 [active since 5s]
```
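Each dump line shows the original direction of the connection (the first `src`, `dst`, `sport` and `dport` fields) followed by the reply direction. When comparing dumps taken on different nodes, only the original tuple matters, since fields such as `[active since 5s]` naturally differ. A small helper to extract that tuple and compare two lines could look like this (the function names are hypothetical):

```sh
#!/bin/sh
# Print the original-direction tuple "src dst sport dport" of one dump line.
orig_tuple() {
    printf '%s\n' "$1" | awk '{
        for (i = 1; i <= NF; i++) {
            if (split($i, kv, "=") != 2) continue
            k = kv[1]
            if ((k == "src" || k == "dst" || k == "sport" || k == "dport") && !(k in seen)) {
                seen[k] = kv[2]   # keep the first (original-direction) value
            }
        }
        print seen["src"], seen["dst"], seen["sport"], seen["dport"]
    }'
}

# Succeed if two dump lines describe the same connection.
same_connection() {
    [ "$(orig_tuple "$1")" = "$(orig_tuple "$2")" ]
}
```

Applied to the FW1 line above, `orig_tuple` prints `192.168.0.3 192.168.0.100 51356 22`. On the real nodes, the input lines would come from `conntrackd -i` on the active node and `conntrackd -e` on the backup.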

On the backup node, we observe that the connection has been replicated to conntrackd's external cache:

```
(shell FW2)# conntrackd -e
tcp 6 ESTABLISHED src=192.168.0.3 dst=192.168.0.100 sport=51356 dport=22 src=192.168.0.100 dst=192.168.1.3 sport=22 dport=51356 [ASSURED] mark=0 [active since 2s]
```
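Replicated entries sit in conntrackd's external cache on FW2; for the backup to actually forward the SSH connection after a takeover, they have to be injected into its kernel connection tracking table. One way to wire this up is a script called from keepalived's `notify_master` and `notify_backup` hooks. The sketch below is loosely modeled on the `primary-backup.sh` example distributed with conntrack-tools; the function names and the `DRY_RUN` switch are hypothetical, and the exact sequence of `conntrackd` options should be checked against the documentation of the installed conntrack-tools version.

```sh
#!/bin/sh
# Run a command, or only print it when DRY_RUN is set (hypothetical switch).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Drive conntrackd when keepalived changes this node's role.
conntrackd_notify() {
    case "$1" in
        primary)
            run conntrackd -c   # commit the external cache into the kernel table
            run conntrackd -f   # flush the internal and external caches
            run conntrackd -R   # resync the internal cache with the kernel table
            run conntrackd -B   # send a bulk update to the other node
            ;;
        backup)
            run conntrackd -n   # request a resync from the active node
            ;;
        *)
            echo "usage: conntrackd_notify primary|backup" >&2
            return 1
            ;;
    esac
}
```

With `DRY_RUN=1`, `conntrackd_notify backup` prints `conntrackd -n` instead of executing it, which makes it easy to check the hook before enabling it on the firewalls.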

Note: Computer clipart by Jakub Steiner, under a Creative Commons share-alike license; available from http://jimmac.musichall.cz/